Agents for Advanced Statistical Analysis

On the 15th of October, Erik MD gave a quick demo of our first version of BigWig at AI Tinkerers Paris. This is a short writeup based on the slides from that talk, "Agents for building ML models".

The slides and the demo are a bit outdated, as we are moving fast, but the core ideas are the same.

There are three parts to the talk/demo:

- Explanation of how agentic workflows work within the Infer platform

- How agents can be used more generally for complex higher-level reasoning

- Some thoughts on what comes next

The Infer Platform

The Infer platform splits into 3 layers stacked on top of each other:

The Core Layer

A unified real-time SQL interface combining data retrieval, statistical modelling and large-scale compute in a single, simple interface.

The Application Layer

A user interface that abstracts away the core layer, enabling users to build, deploy and integrate machine learning models across their data stack.

The Agent Layer

An agentic framework built on top of the core layer to enable autonomous workflows and analysis.

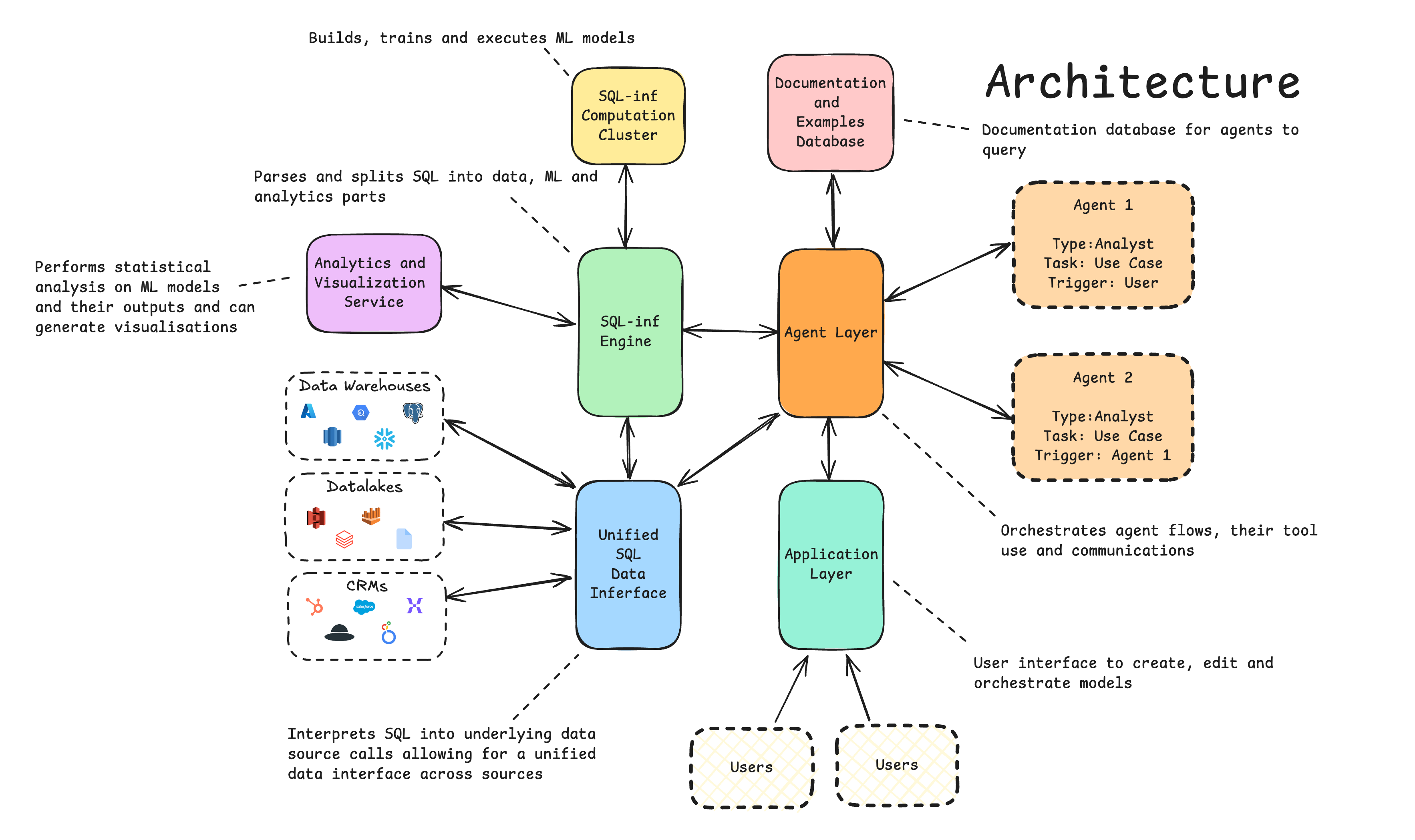

Platform Architecture

The platform consists of a number of interconnected modules, all of which the agents in the Agent Layer can use and interact with. These modules allow the agents to perform a wide range of tasks, from data retrieval and simple EDA to model building and large-scale inference.

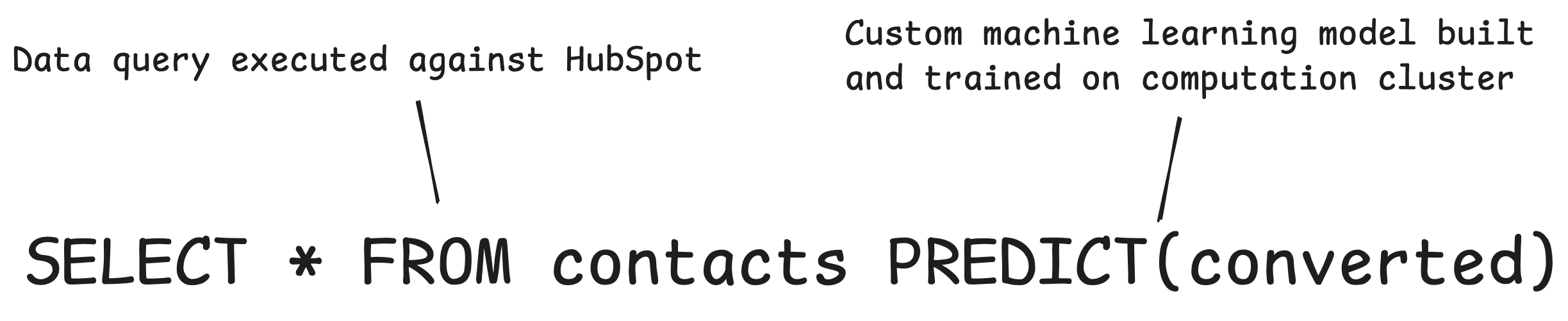

SQL-inf - Unifying Data, Modelling and Compute

The common interface for interacting with the platform is through SQL-inf.

SQL-inf is a unified interface for:

- retrieving, modelling and joining data across a wide range of sources

- statistical analysis and model building

- running large-scale compute

As an example of how SQL-inf works, this is how easy it is to model, build and execute a custom lead scoring model on your HubSpot data in a single, simple SQL statement:

SQL-inf and Agents

SQL-inf is Perfect for Agents!

SQL-inf and the Core Layer lend themselves extremely well to agentic flows: they give agents a simple layer of software abstraction for querying data and building machine learning and statistical models.

How it works

- Agents render LLM inputs using Jinja templates, enriched with the agent's history and other properties

- Jinja is used for both templating and control flow

- The type and task of an agent determine which Jinja templates to use

- The LLM used is Anthropic's Claude 3.5 Sonnet

- Agents are orchestrated through a simple state machine shared across all agents, meaning they run on a shared, synchronised clock

- Agent states are persisted in MongoDB
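The orchestration idea in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Infer implementation: the state names, the `Agent` class and the `run` function are hypothetical, the LLM call and Jinja rendering are left out, and a plain dict stands in for the persistence layer.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    PLAN = "plan"
    ACT = "act"
    DONE = "done"


# Hypothetical transition table: every agent moves one step per tick.
TRANSITIONS = {
    State.PLAN: State.ACT,
    State.ACT: State.DONE,
    State.DONE: State.DONE,
}


@dataclass
class Agent:
    name: str
    state: State = State.PLAN
    history: list = field(default_factory=list)

    def step(self, tick: int) -> None:
        # A real agent would render a prompt and call the LLM here.
        if self.state is not State.DONE:
            self.history.append((tick, self.state.value))
        self.state = TRANSITIONS[self.state]


def run(agents, store, max_ticks=10):
    """Advance all agents in lockstep and snapshot each one after every tick."""
    for tick in range(max_ticks):
        if all(agent.state is State.DONE for agent in agents):
            return tick
        for agent in agents:
            agent.step(tick)
            # `store` is a plain dict standing in for a database collection.
            store[agent.name] = {
                "tick": tick,
                "state": agent.state.value,
                "history": list(agent.history),
            }
    return max_ticks


store = {}
agents = [Agent("eda"), Agent("modeller")]
ticks_used = run(agents, store)
print(ticks_used, store["eda"]["state"])
```

Because every agent advances on the same tick, the persisted snapshots are always comparable across agents, which makes it easy to stop and resume a run.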

Why does this work so well when copilots and other agents do not?

LLMs are great at acting as a good analyst at a high level - they have read a lot of Medium articles and course notes about that!

They are also pretty decent at writing SQL - maybe not so much at zero-shot text->SQL generation, but through an iterative process with a clear measure of quality for each step, they tend to converge on a good, stable answer.

They are also really good at tool use - provided the tools are well-defined, behave well and return well-structured outputs.
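To make "well-defined tools with well-structured outputs" concrete, here is a minimal sketch of a query tool that always returns the same shape, whether the query succeeds or fails. It uses SQLite from the Python standard library purely as a stand-in for a SQL backend; `ToolResult`, `run_sql` and the `leads` table are hypothetical names for illustration, not part of SQL-inf.

```python
import json
import sqlite3
from dataclasses import asdict, dataclass


@dataclass
class ToolResult:
    ok: bool
    rows: list
    error: str = ""


def run_sql(conn: sqlite3.Connection, query: str, limit: int = 5) -> ToolResult:
    """Run a query and return the same structure on success and on failure."""
    try:
        cursor = conn.execute(query)
        # `description` is None for statements that return no rows.
        columns = [col[0] for col in cursor.description or []]
        rows = [dict(zip(columns, row)) for row in cursor.fetchmany(limit)]
        return ToolResult(ok=True, rows=rows)
    except sqlite3.Error as exc:
        # Errors come back as data the agent can read and react to.
        return ToolResult(ok=False, rows=[], error=str(exc))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE leads (id INTEGER, score REAL)")
conn.execute("INSERT INTO leads VALUES (1, 0.9), (2, 0.2)")

good = run_sql(conn, "SELECT id, score FROM leads ORDER BY id")
bad = run_sql(conn, "SELECT missing FROM leads")
print(json.dumps(asdict(good)))
print(json.dumps(asdict(bad)))
```

Returning failures as structured data rather than raising gives the LLM something predictable to iterate on in the next step.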

What makes a good agent

Why agents work so well for Infer revisited

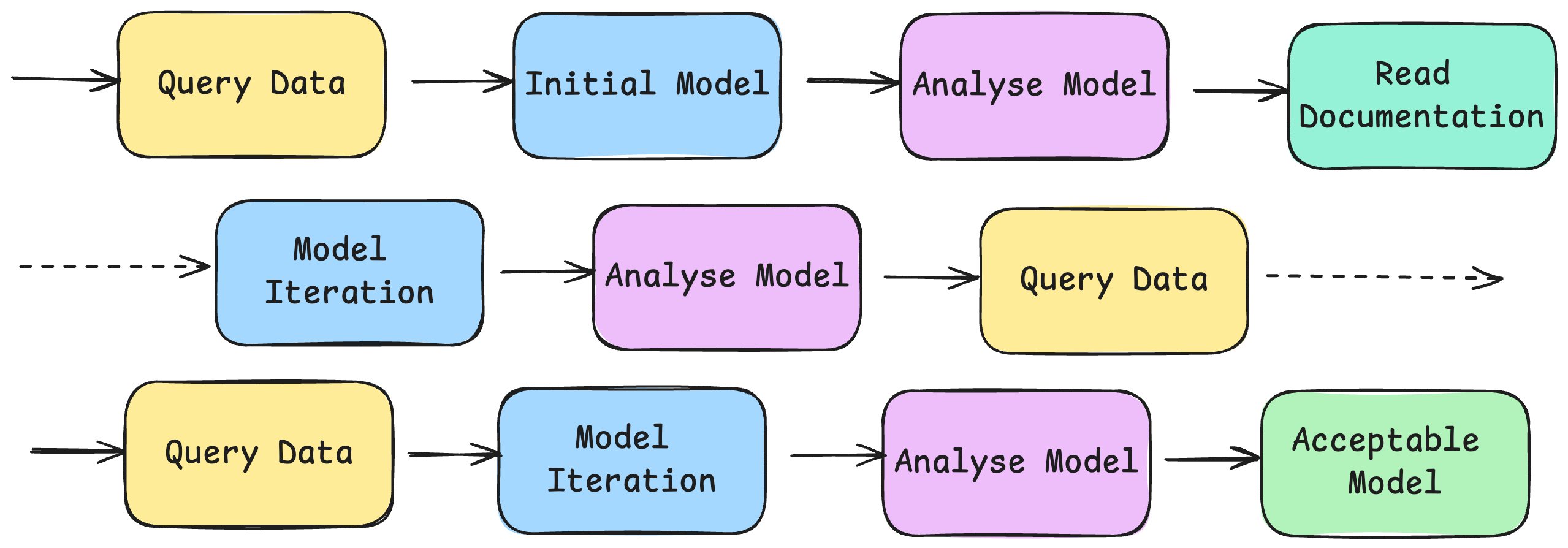

We have well-defined software abstractions that wrap complicated implementations - reducing variability and instability

We have an objective scoring model for each step, in our case statistical measures on the models combined with some heuristics

This scoring allows the agents to effectively search for a converging solution through chained actions - locally unstable, but globally stable

We use LLMs on well-learned domains: high-level analyst reasoning (good) and SQL generation (okay-ish)
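The "locally unstable, but globally stable" point can be illustrated with a toy score-guided search. This is a sketch under stated assumptions rather than how the Infer agents are built: `search`, `toy_score` and `noisy_propose` are hypothetical, the "model" is a single number, and a random perturbation stands in for an LLM proposing the next step.

```python
import random


def search(propose, score, start, steps=200, seed=0):
    """Keep the best-scoring candidate seen so far and discard worse proposals."""
    rng = random.Random(seed)
    best, best_score = start, score(start)
    trace = [best_score]
    for _ in range(steps):
        candidate = propose(best, rng)
        candidate_score = score(candidate)
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
        trace.append(best_score)
    return best, best_score, trace


def toy_score(x):
    # Stand-in for an objective model metric; it peaks at x = 3.0.
    return -(x - 3.0) ** 2


def noisy_propose(x, rng):
    # Individual proposals are erratic, like single LLM steps.
    return x + rng.gauss(0.0, 1.0)


best, best_score, trace = search(noisy_propose, toy_score, start=0.0)
print(round(best, 3), round(best_score, 3))
```

Any single proposal may be worse than the current candidate, but because only improvements are accepted, the accepted score never decreases over the chain of actions.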

A simple example

A simple example of an agentic flow for building a machine learning model

What comes next

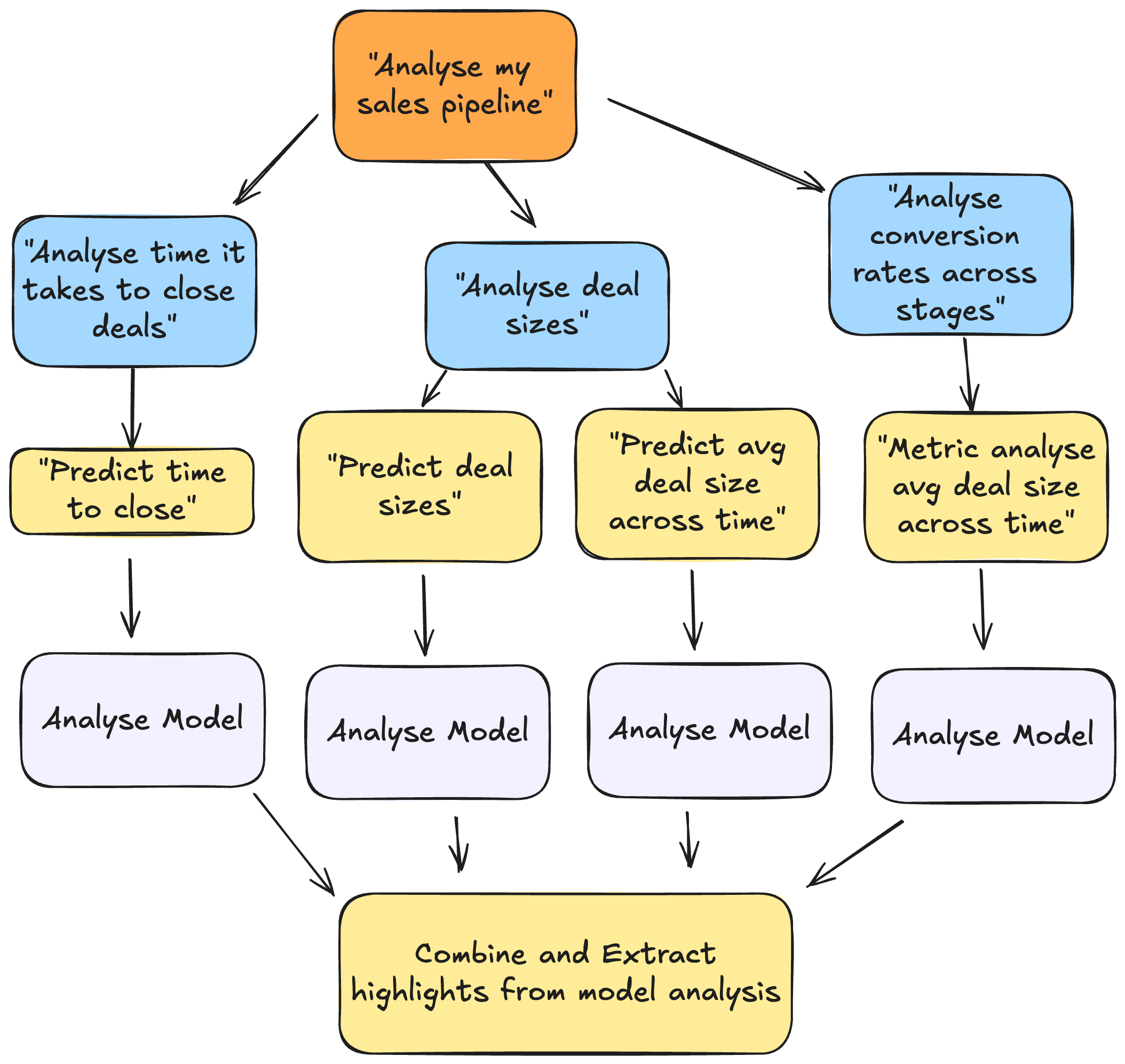

Higher-level reasoning agents - using the current single-flow agents in a multi-agent environment to solve higher-level tasks

Reinforcement learning on reasoning steps - new reasoning model on top of current agents, to improve local stability of reasoning chains

Still some challenges to overcome

- Numerical methods are often noisy and outputs are messy, making it at times difficult to control the agents - still stable globally but lengthy detours occur

- Depending on data sets, numerical methods can also fail in non-obvious ways during tasks like calibration or optimisation, which is difficult for LLMs (and humans!) to iterate on and respond well to

Some final thoughts

Is this something akin to System 2 thinking?

I don't know - but it is definitely pretty impressive and it is well beyond System 1 smarter RPA/"workflow automation" thinking - perhaps System 1.5?

If not quite System 2 yet, I think we have the beginning of a general autonomous system for performing statistical modelling and analysis - and that is definitely, from a human perspective, System 2!

It also brings up some interesting new aspects for the future of analysis:

- Infinite scale: We are no longer constrained by human resources, we can create as many models and run as much analysis as we like

- Wasted work is okay: Scale and low cost mean you can try any odd piece of analysis, and if it doesn't work out, that is okay

- Everything becomes backend: With agents performing most, if not all, of the work, the role of the frontend changes completely. It is no longer about doing the work, but about presenting the results